It must be infuriating to be in a situation where there’s a clear problem, and all the obvious remedies continually fail to produce any legitimate result. That’s what this blog is like: every month rolls by with some half-baked ideas partially implemented and barely documented. And on the last day of the month, there’s a kind of panicked scramble to fulfill an entirely arbitrary self-enforced quota just to convince myself that I’m doing stuff.

Anyway, it’s pretty rare for me to be doing absolutely nothing, but mustering the effort to actually complete a project to an appreciable extent is pretty hard. This might be indicative of a shift in the type of projects that I try to work on: they’re generally somewhat larger in scope or otherwise more experimental. And while I may have been notorious before for not leaving projects in a well-documented and completed state, these new ideas often languish much earlier in the development process.

There’s always pressure to present things only when they are complete and presentable, because, after all, timing is key and nothing can be more detrimental to an idea than ill-timed and poor execution. But at the same time, I think the point of this blog is to create a kind of virtual paper trail of an idea and how it evolves, regardless of whether or not it falters at birth.

With that said, I’m going to create a somewhat brief list of projects and ideas that I’m currently experimenting with or simply have yet to publish a blog post for.

- One of the earlier entries of the backlog is an HTML5 Scramble with Friends clone, which performs well on mobile with touch events and CSS animations while supporting keyboard-based interaction on desktop. I’ve always intended to build some kind of multiplayer server component so that people could compete in real time. Maybe at some point it’ll be included as a kind of mini game within Protobowl.

- The largest project is definitely Protobowl, which has just recently passed its one year anniversary of sorts. It’s rather odd that it hasn’t formally been given a post on this blog yet, but c’est la vie. Protobowl is hopefully on the verge of a rather large update which will add several oft-requested features, and maybe by then I’ll have completed something which is publishable as a blog post.

- Font Interpolation/Typeface Gradients. I actually have no idea how long this has been on my to-do list (years, no doubt), but the concept is rather nifty. Attributes like object size or color can be smoothly interpolated in the form of something like a gradient. The analogue for this kind of transition when applied to text would be the ability to type a word whose first letter is in one font and whose last letter is in another, with all the intermediate letters being some kind of hybrid. I never got very far in implementing it, so it may be a while until this sees the light of day.

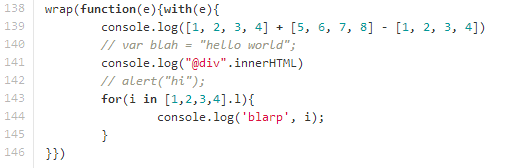

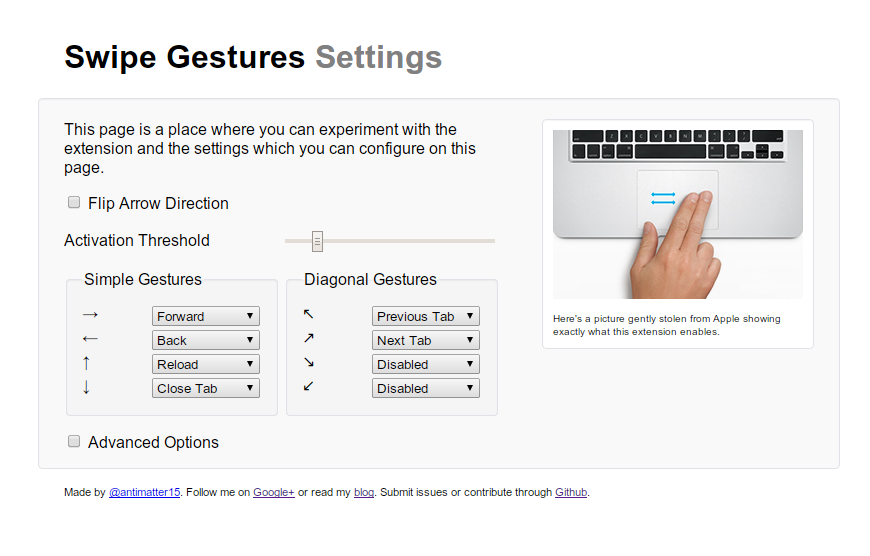

- I’ve always wanted to build a Chrome extension with some amount of OCR or text detection capabilities so that people could select text embedded within an image as if it weren’t just an image. At one point I narrowed down the scope of this project so that the OCR part wasn’t even that important (the goal was then just a Web Worker-threaded implementation of the stroke width transform algorithm, plus cleverly drawing some rotated boxes along with mouse movements). I haven’t had much time to work on this so it hasn’t gone too far, but I do have a somewhat working prototype somewhere. This one too is several years old.

- In the next few days, I plan on publishing a blog post I’ve been working on which is something of a humorous satire on some of the more controversial issues which have arisen this summer.

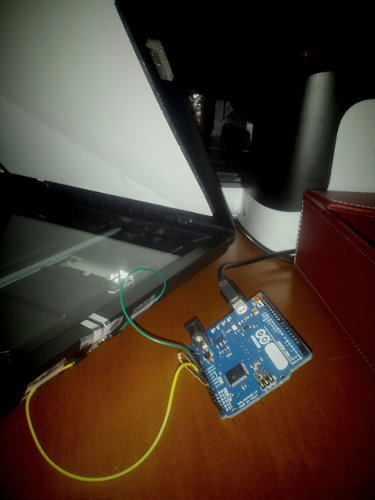

- And there are several projects which have actually gotten blog posts, though those posts weren’t formal announcements so much as a written version of my thinking process behind them. I haven’t actually finished the Pedant, in spite of the fact that the hardware is theoretically in something of a functional state (I remember that I built it with the intent that it could be cool to wear around during MIT’s CPW back in April, but classes start in a few days and it hasn’t progressed at all since). Probably one of the most promising ideas is the improved, vectorized and modern approach to video lectures, but that too needs work.

- I’m building the website for a local charity organization which was started by a childhood friend, and maybe I’ll publish a blog post if that ever gets deployed or something.
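
For the typeface gradient idea in the list above, here is a minimal sketch of what the interpolation could look like. It is not the prototype mentioned earlier, just an illustration under a big assumption: that each letter in both fonts is already described by the same number of outline points in matching order (getting real fonts into that form is presumably the hard part). The names `letter_weights`, `blend_outline` and `typeface_gradient`, and the dictionary-of-points font format, are all hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Outline = List[Point]       # hypothetical: one glyph as a flat list of outline points
Font = Dict[str, Outline]   # hypothetical: maps a character to its outline


def letter_weights(n: int) -> List[float]:
    """Blend weight per letter: 0.0 for the first letter, 1.0 for the last."""
    if n <= 1:
        return [0.0] * n
    return [i / (n - 1) for i in range(n)]


def blend_outline(a: Outline, b: Outline, t: float) -> Outline:
    """Linearly interpolate two outlines point by point.

    Assumes both outlines have the same number of points in corresponding
    order, which real fonts generally do not guarantee.
    """
    if len(a) != len(b):
        raise ValueError("outlines must have the same number of points")
    return [
        ((1 - t) * ax + t * bx, (1 - t) * ay + t * by)
        for (ax, ay), (bx, by) in zip(a, b)
    ]


def typeface_gradient(word: str, font_a: Font, font_b: Font) -> List[Outline]:
    """Return one blended outline per letter, fading from font_a to font_b."""
    weights = letter_weights(len(word))
    return [blend_outline(font_a[ch], font_b[ch], t) for ch, t in zip(word, weights)]
```

The first letter comes out entirely in `font_a`, the last entirely in `font_b`, and everything in between is a straight-line mix of the two, which is the same thing a color gradient does with RGB values.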