Cooley-Tukey FFT + DCT + IDCT in under 1K of JavaScript

30 May 2015

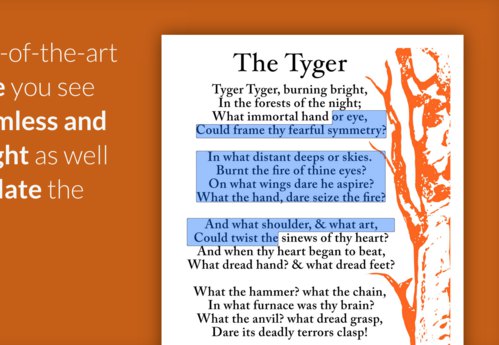

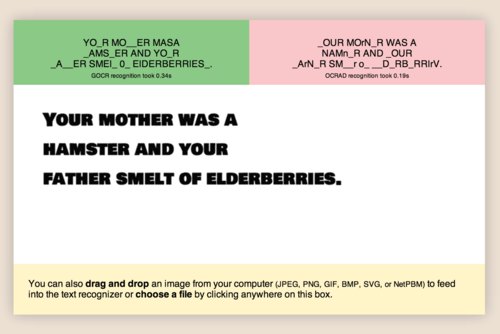

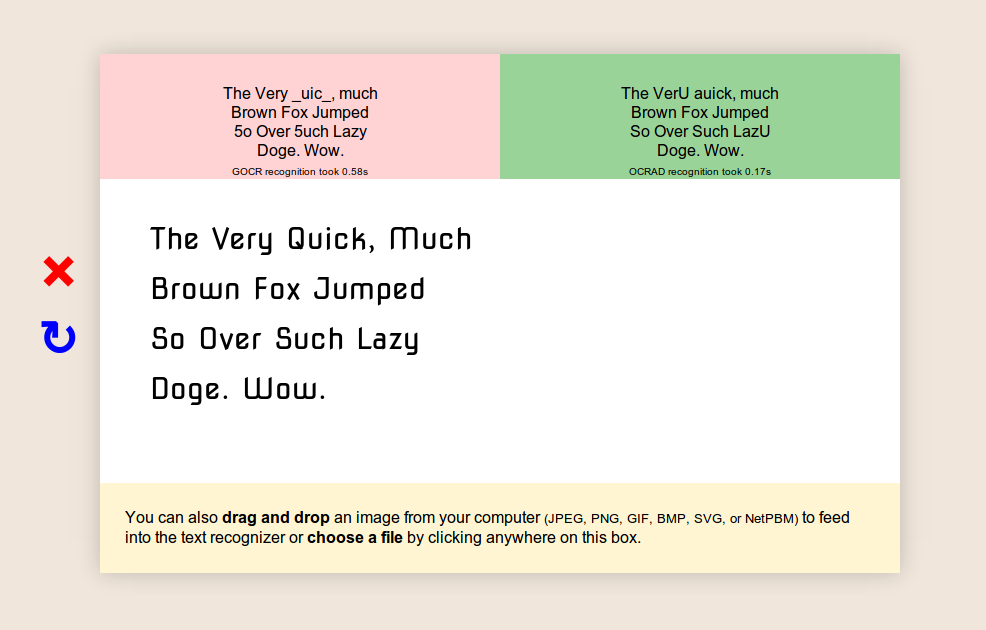

Almost a year ago, I wrote a simple script for computing perceptual hashes in JavaScript (it’s going to be part of a forthcoming Naptha feature which allows it to recognize source images for memes and do perfect inpainting). One step of the process is computing the 2D Discrete Cosine Transform of a shrunken version of the image.

The problem is that step was kind of ridiculously slow, and it’s not really hard to see why: there’s a series of four nested for loops, because that’s the obvious naive way to compute the DCT. For an N×N input that works out to on the order of N^4 multiply-adds.
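For reference, here is a minimal sketch of what that naive approach looks like. It is not the code from the original script: the name `naiveDCT2D`, the array-of-rows input, and the lack of any normalization are all assumptions made for illustration.

```javascript
// Hypothetical helper, not from the original script: a direct 2D DCT-II
// over an N x N image stored as an array of rows. No normalization applied.
function naiveDCT2D(img) {
    var N = img.length, out = [];
    for (var u = 0; u < N; u++) {
        out.push(new Float64Array(N));
        for (var v = 0; v < N; v++) {
            var sum = 0;
            // Every output coefficient visits every input pixel.
            for (var x = 0; x < N; x++)
                for (var y = 0; y < N; y++)
                    sum += img[x][y] *
                        Math.cos(Math.PI * (2 * x + 1) * u / (2 * N)) *
                        Math.cos(Math.PI * (2 * y + 1) * v / (2 * N));
            out[u][v] = sum;
        }
    }
    return out;
}
```

For a 32x32 image the innermost statement runs 32^4 times, roughly a million iterations with two cosine calls each.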

In practice, it’s actually not all that bad because you only have to do it once per image, and the dimensions of the source image are a fixed 32x32. But it still felt pretty bad, so when I was looking over the code again earlier this week, I tried doing some research to figure out a faster way to do the DCT.

Surely you’d imagine that there have to be extraordinarily efficient implementations of the DCT out there, given that it’s the very foundation of JPEG. And you’d be right, but they’ve generally unrolled the butterfly into a series of bit-shifts which are quite unlikely to generalize beyond the 8x8 block size.

I’m reasonably confident that FFTW3 has a fast DCT implementation which also is capable of generalization, but it seems buried within quite a lot of code.

MiniFFT

This isn’t a super performant implementation, but it’s not horrendously slow and it doesn’t use recursion. It’s probably pretty trivial to make it quite a bit faster by precomputing sine and cosine tables. This uses the standard Cooley-Tukey radix-2 decimation-in-time algorithm, which means it only works for one-dimensional arrays whose length is a power of two.

It doesn’t check that the length is a power of two; if it isn’t, it’ll just give you the wrong answer. You should definitely check that it’s true beforehand.
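One way to do that check is sketched below. Neither `isPowerOfTwo` nor `assertFFTInput` is part of the original code; they are hypothetical helpers you could call before handing arrays to the FFT.

```javascript
// Hypothetical guard, not part of the original script.
function isPowerOfTwo(n) {
    // A power of two has exactly one bit set, so n & (n - 1) clears it to 0.
    return Number.isInteger(n) && n > 0 && (n & (n - 1)) === 0;
}

// Hypothetical wrapper: throws instead of silently producing garbage.
function assertFFTInput(re, im) {
    if (re.length !== im.length)
        throw new Error('re and im must have the same length');
    if (!isPowerOfTwo(re.length))
        throw new Error('length must be a power of two, got ' + re.length);
}

console.log(isPowerOfTwo(32)); // true
console.log(isPowerOfTwo(24)); // false
```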

    function miniFFT(re, im) {
        var N = re.length;
        // Bit-reversal permutation: swap each element with the one at its
        // bit-reversed index.
        for (var i = 0; i < N; i++) {
            for (var j = 0, h = i, k = N; k >>= 1; h >>= 1)
                j = (j << 1) | (h & 1);
            if (j > i) {
                re[j] = [re[i], re[i] = re[j]][0];
                im[j] = [im[i], im[i] = im[j]][0];
            }
        }
        // Butterflies: hN is half the size of the block built at each stage.
        for (var hN = 1; hN * 2 <= N; hN *= 2)
            for (var i = 0; i < N; i += hN * 2)
                for (var j = i; j < i + hN; j++) {
                    var cos = Math.cos(Math.PI * (j - i) / hN),
                        sin = Math.sin(Math.PI * (j - i) / hN);
                    var tre =  re[j+hN] * cos + im[j+hN] * sin,
                        tim = -re[j+hN] * sin + im[j+hN] * cos;
                    re[j + hN] = re[j] - tre; im[j + hN] = im[j] - tim;
                    re[j] += tre; im[j] += tim;
                }
    }

The transformation happens in place, so it’ll modify whatever arrays you pass in.

    var re = [1, 2, 3, 4],
        im = [0, 0, 0, 0];
    miniFFT(re, im);
    console.log(re, im); // [ 10, -2, -2, -2 ] [ 0, 2, 0, -2 ] (up to floating-point rounding)
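As for the remark about precomputing sine and cosine tables, a rough sketch of that idea follows. `tableFFT` is a hypothetical variant, not the original code: it is the same radix-2 algorithm, with the per-butterfly `Math.cos` and `Math.sin` calls replaced by lookups into tables of size N/2.

```javascript
// Hypothetical variant, not from the original post: same algorithm as
// miniFFT, but twiddle factors come from precomputed tables.
function tableFFT(re, im) {
    var N = re.length, half = N >> 1;
    var cosT = new Float64Array(half), sinT = new Float64Array(half);
    for (var t = 0; t < half; t++) {
        cosT[t] = Math.cos(2 * Math.PI * t / N);
        sinT[t] = Math.sin(2 * Math.PI * t / N);
    }
    // Bit-reversal permutation, as in miniFFT.
    for (var i = 0; i < N; i++) {
        for (var j = 0, h = i, k = N; k >>= 1; h >>= 1)
            j = (j << 1) | (h & 1);
        if (j > i) {
            var tr = re[i]; re[i] = re[j]; re[j] = tr;
            var ti = im[i]; im[i] = im[j]; im[j] = ti;
        }
    }
    for (var hN = 1; hN * 2 <= N; hN *= 2) {
        // The angle PI * m / hN corresponds to table entry m * stride.
        var stride = half / hN;
        for (var i = 0; i < N; i += hN * 2)
            for (var m = 0; m < hN; m++) {
                var a = i + m, b = a + hN,
                    c = cosT[m * stride], s = sinT[m * stride];
                var tre =  re[b] * c + im[b] * s,
                    tim = -re[b] * s + im[b] * c;
                re[b] = re[a] - tre; im[b] = im[a] - tim;
                re[a] += tre;        im[a] += tim;
            }
    }
}

var re = [1, 2, 3, 4], im = [0, 0, 0, 0];
tableFFT(re, im);
console.log(re, im);
```

As written the tables are rebuilt on every call, so the saving is modest. If you transform many arrays of the same length, caching the tables per N would presumably help more.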

Minified, it’s only 359 bytes. Unlike this other contender for the dubious prize of smallest JavaScript FFT implementation, it doesn’t require a special complex number library three times its size (though this doesn’t fit in a tweet; I’ll be really impressed if someone manages to do that).

I haven’t really golfed the code to the smallest size possible, but I think it’s at a reasonable balance of brevity while still being somewhat comprehensible.

It’s based on an in-place FFT from Princeton’s intro CS class and Project Nayuki’s FFT.

MiniIFFT

Turns out that you can invert the FFT modulo a scaling factor just by swapping the real and imaginary arguments.

    function miniIFFT(re, im){
        miniFFT(im, re); // swapped arguments give the unscaled inverse
        for(var i = 0, N = re.length; i < N; i++){
            im[i] /= N;
            re[i] /= N;
        }
    }
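Here is a short derivation of why the swap works, in my own notation rather than anything from the sources above. Write $\sigma(a + bi) = b + ai$ for swapping real and imaginary parts, which is the same as $i\,\overline{(a + bi)}$. Passing `(im, re)` to `miniFFT` applies $\sigma$ on the way in, and because the results are written back into those same swapped arrays, it applies $\sigma$ again on the way out.

$$
\mathrm{FFT}[\sigma(X)]_n
= \sum_{k=0}^{N-1} i\,\overline{X_k}\,e^{-2\pi i kn/N}
= i\,\overline{\sum_{k=0}^{N-1} X_k\,e^{+2\pi i kn/N}}
= \sigma\!\Big(\sum_{k=0}^{N-1} X_k\,e^{+2\pi i kn/N}\Big)
$$

$$
\sigma\big(\mathrm{FFT}[\sigma(X)]\big)_n
= \sum_{k=0}^{N-1} X_k\,e^{+2\pi i kn/N}
= N \cdot \mathrm{IFFT}[X]_n
$$

The leftover factor of $N$ is the scaling that the loop divides out.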

MiniDCT

This is an implementation of a Type-II DCT using MiniFFT. For details about Makhoul’s algorithm for transforming a DCT into an FFT, see this post.
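As a rough summary of the trick, in notation of my own choosing: $x$ is the input array `s`, $v$ is the reordered array that goes into the FFT, $V$ is its transform, and $C$ is the DCT output with the unnormalized factor of 2.

$$
v_n = x_{2n}, \qquad v_{N-1-n} = x_{2n+1}, \qquad 0 \le n < N/2
$$

$$
V_k = \sum_{n=0}^{N-1} v_n\,e^{-2\pi i nk/N}
$$

$$
C_k = 2\sum_{n=0}^{N-1} x_n \cos\frac{\pi k (2n+1)}{2N}
= 2\,\mathrm{Re}\!\left(e^{-i\pi k/(2N)}\,V_k\right)
= 2\left(\mathrm{Re}\,V_k \cos\frac{\pi k}{2N} + \mathrm{Im}\,V_k \sin\frac{\pi k}{2N}\right)
$$

So one length-N FFT plus a per-bin rotation replaces the O(N^2) sum.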

    function miniDCT(s){
        var N = s.length,
            K = -Math.PI / (2 * N),
            re = new Float64Array(N),
            im = new Float64Array(N);
        // Reorder: even-indexed samples first, odd-indexed samples reversed at the end.
        for(var i = 0, j = N; j > i; i++){
            re[i] = s[i * 2];
            re[--j] = s[i * 2 + 1];
        }
        miniFFT(re, im);
        // Rotate each bin by K*i and keep twice the real part.
        for(var i = 0; i < N; i++)
            s[i] = 2*re[i]*Math.cos(K*i)-2*im[i]*Math.sin(K*i);
    }

Like MiniFFT, it operates in-place (well, not really, but it writes its output to the source array).

    var arr = [3, 4, 1, 7];
    miniDCT(arr);
    console.log(arr); // [ 30, -5.09493566, 7.0710678118, -8.604744653 ]
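If you want to sanity-check those numbers without trusting the FFT route, a direct O(N^2) evaluation of the DCT-II sum works. `naiveDCT` below is a hypothetical reference helper, not part of the original code, and it assumes the same unnormalized scaling (a factor of 2 in front of the sum).

```javascript
// Hypothetical reference implementation, not from the original post:
// direct evaluation of the unnormalized DCT-II, O(N^2).
function naiveDCT(x) {
    var N = x.length, out = new Float64Array(N);
    for (var k = 0; k < N; k++) {
        var sum = 0;
        for (var n = 0; n < N; n++)
            sum += x[n] * Math.cos(Math.PI * k * (2 * n + 1) / (2 * N));
        out[k] = 2 * sum;
    }
    return out;
}

console.log(naiveDCT([3, 4, 1, 7])); // close to 30, -5.0949, 7.0711, -8.6047
```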

It produces the same output as scipy’s fftpack (with its default, unnormalized settings), but Matlab’s DCT uses orthogonal normalization and produces a different result. However, it’s pretty simple to do it the Matlab way: just scale everything by 1/sqrt(2 * N) and divide the first element by sqrt(2).

    function matlabDCT(arr){
        miniDCT(arr);
        arr[0] /= Math.sqrt(2);
        for(var i = 0, N = arr.length; i < N; i++) arr[i] /= Math.sqrt(2 * N);
    }

MiniIDCT

I really considered renaming this series of functions into TinyFFT and friends because of the predicament of MiniIDCT— yeah, exactly— the horror— repeating I’s.

This is an implementation of a Type-III DCT, also known as the IDCT because it happens to be the inverse of a Type-II DCT (frequently referred to as “The DCT”).

    function miniIDCT(s){
        var N = s.length,
            K = Math.PI / (2 * N),
            im = new Float64Array(N),
            re = new Float64Array(N);
        re[0] = s[0] / N / 2;
        // Build (s[i] - j*s[N-i]) rotated by the angle i*K, scaled by 1/(2N).
        for(var i = 1; i < N; i++){
            var im2 = Math.sin(i*K), re2 = Math.cos(i*K);
            re[i] = (s[N - i] * im2 + s[i] * re2) / N / 2;
            im[i] = (im2 * s[i] - s[N - i] * re2) / N / 2;
        }
        miniFFT(im, re); // inverse FFT via swapped arguments
        // Interleave: first half to even slots, reversed second half to odd slots.
        for(var i = 0; i < N / 2; i++){
            s[2 * i] = re[i];
            s[2 * i + 1] = re[N - i - 1];
        }
    }

This is based on Tom Grydeland’s IDL implementation of the IDCT.
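Before getting into how it works, here is a direct O(N^2) inverse you could use as a cross-check. `naiveIDCT` is a hypothetical helper, not part of the original code; it assumes its input uses the unnormalized scaling that miniDCT produces, so the first coefficient is halved and the whole sum is divided by N.

```javascript
// Hypothetical reference implementation, not from the original post:
// direct inverse of the unnormalized DCT-II, O(N^2).
function naiveIDCT(c) {
    var N = c.length, out = new Float64Array(N);
    for (var n = 0; n < N; n++) {
        var sum = c[0] / 2; // the DC coefficient is weighted by one half
        for (var k = 1; k < N; k++)
            sum += c[k] * Math.cos(Math.PI * k * (2 * n + 1) / (2 * N));
        out[n] = sum / N;
    }
    return out;
}

// Feeding in the DCT output from the earlier example should give back
// something close to [3, 4, 1, 7].
console.log(naiveIDCT([30, -5.09493566, 7.0710678118, -8.604744653]));
```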

Let’s start with an 8-element array of real numbers (DCT values).

[A, B, C, D, E, F, G, H]

From that it generates the corresponding complex values. Note that the real part is intact, and the imaginary part is the original array with its head chopped off and the rest of it flipped around and attached to a water balloon.

[A, B, C, D, E, F, G, H] - j[0, H, G, F, E, D, C, B]

Then the complex value at each index k is rotated by exp(j * k * pi / (2 * N)) to get spectral components. After an IFFT that gets transformed into:

[a, c, e, g, h, f, d, b]

It took me a while to see what was going on there, but the even-indexed outputs land in the first half of the result, and the odd-indexed ones land in the second half in reverse order.

Scrambled a c e g h f d b

Even Indexed a c e g

Odd Indexed h f d b

Odd Reversed b d f h

Unscrambled a b c d e f g h
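The reordering can also be pulled out as a standalone function. This is only a sketch: `unscramble` is a hypothetical name, it mirrors the final loop of miniIDCT as written, and it uses the numbers 0 to 7 for the intended output positions instead of letters.

```javascript
// Hypothetical helper mirroring the last loop of miniIDCT: the first half
// of the IFFT output fills the even slots, and the second half, read
// backwards, fills the odd slots.
function unscramble(v) {
    var N = v.length, out = new Array(N);
    for (var i = 0; i < N / 2; i++) {
        out[2 * i] = v[i];
        out[2 * i + 1] = v[N - i - 1];
    }
    return out;
}

console.log(unscramble([0, 2, 4, 6, 7, 5, 3, 1]).join(' ')); // 0 1 2 3 4 5 6 7
```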