This site is going down tomorrow in protest of SOPA/PIPA 17 January 2012

Not that anyone is going to particularly miss my blog (most of my other web pages will still be up because I’m far too lazy to go through all of them in order to make a slightly less futile protest). But this is one of the most interesting internet-wide events that I’ve ever encountered, and I would be ashamed not to take part in it. Copyright and its ongoing war with libertarian and anarchist mentalities has fascinated me ever since I squinted through Free Culture on my iPhone more than five years ago. Going to Jonathan Zittrain’s Free Culture X keynote a few years ago was fun too.

I’m sure most would feel uncomfortable with my characterization of this as some cyberlibertarian movement, but I think it’s an entirely excusable position for someone living through a period of adolescence characterized by rebellion and Ayn Rand. It’s certainly not the only perspective, but it seems the most poignant and consistent, not that consistency is necessarily a goal of any legislative body. Legislative bodies are meant, to borrow from programming jargon, to monkey-patch a framework that ill-approximates the societal expectation of government.

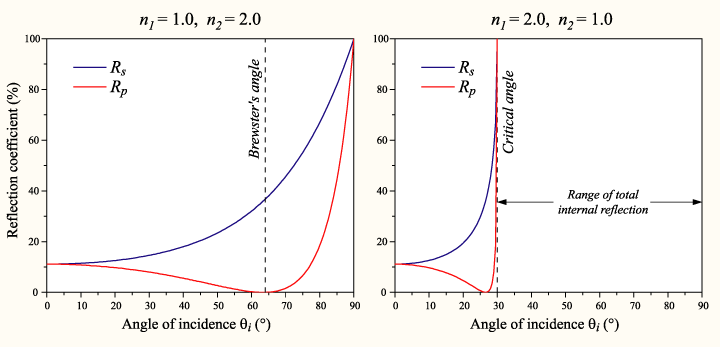

Now excuse me while I contrive a metaphor that relates to something that I don’t know much about and will seem cringe-worthy to anyone knowledgeable in the subject. Let’s say that the Earth is the shape of what the ideal government is. Not ideal in the sense that it’s perfect and elegant, but in the sense that it is empirically derived through the scientific process of experiment. But in large part, this mass conforms to a very general principle (maximizing individual freedoms). I would characterize this as approximating the Earth as a sphere. For nearly all applications, this rather crude geometric model works astonishingly well, leading some to even believe that this is what the world actually is: that everything else, the obsession with finding imperfection, is actually a delusion and every deviation comes from measurement error (well, that’s where this metaphor breaks down, because nobody actually does this). Legislators shape the body of law to conform with the needs of society by poking and adding ridges wherever the overlay is incongruous. They do the passive activity of fixing the model, the geoid, to incredible precision. But there’s a more radical way: molding the Earth into what our model was. We can forget about the lapses, and soon enough the surface of the Earth will erode down into that perfect sphere of ideology.

There. I think I’ve fulfilled my obligation to write some words with some ostensible meaning with regard to something that pertains rather dearly to my life. If this passes and we descend into a dystopian nightmare reminiscent of 1984, at least I’ll have something to look back on. An old man filled with regret waiting to die alone. Or at least without an internet. I did my best (but still far from enough, as is anything besides martyrdom) to preserve crowd immunity to hazardous legislation, but alas we were stricken.