Experimental Ambient Light Parallel Reflection Optical Multitouch Overlay Proof-Of-Concept Implementation Version 2 22 March 2009

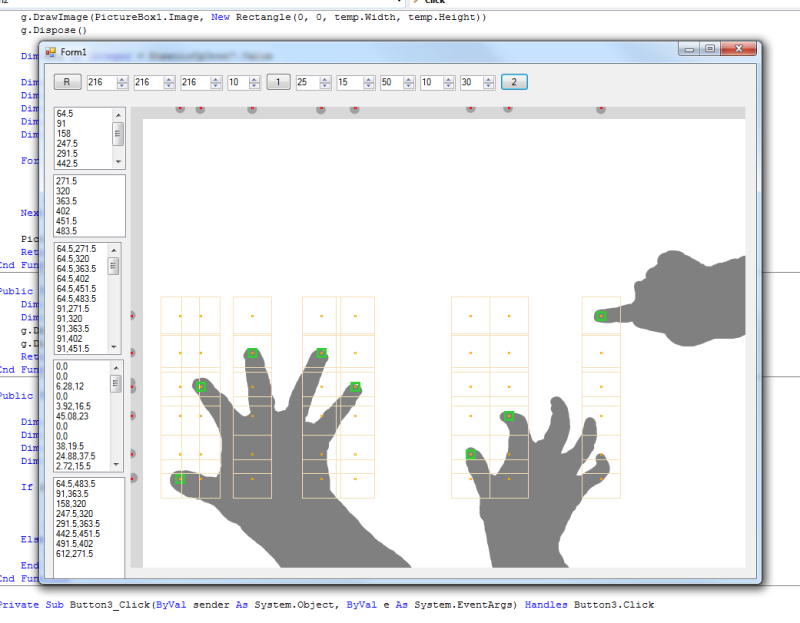

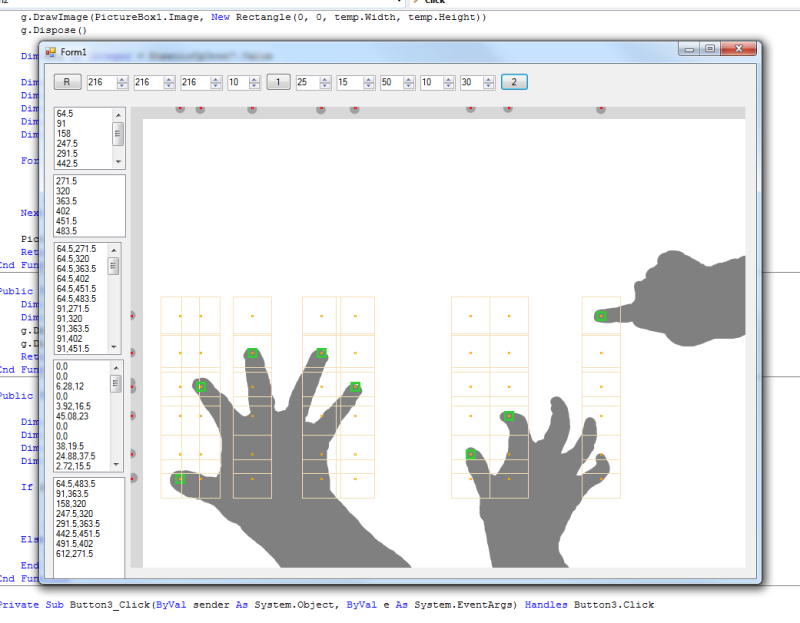

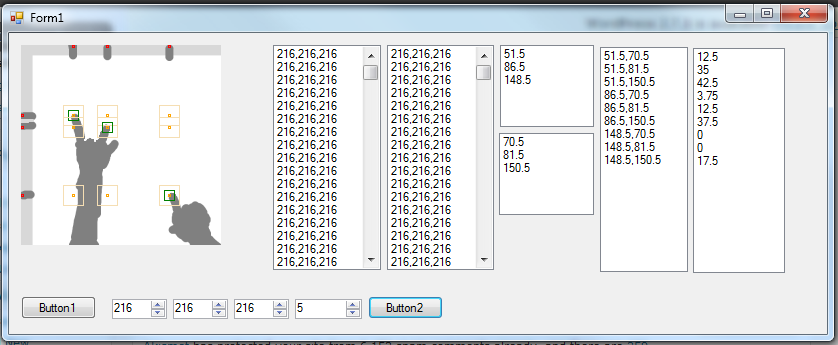

Early on, I recognized that one of the biggest issues with my idea of using mirrors was the computational power needed to run the finger-position-detection algorithm. I recently realized that most of that work is totally superfluous. My new idea is to have the software search only a 1-pixel-wide band of each mirror to find a handful of positions along each axis. Those positions are then combined into a list of every possible (x, y) pairing. Each pairing goes through a test to determine whether or not it’s a fingertip. The easiest way (and likely quite wildly inaccurate in the real world) is to walk the perimeter of a square centered on that point and measure what percent of that perimeter differs from the surroundings. You keep the pairings that pass the test, and then you have your points!
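Here’s a rough Python sketch of that pairing-and-test step, just to make it concrete. It is not the code from the proof-of-concept: the names (`square_perimeter`, `perimeter_ratio`, `find_fingertips`), the background value of 0, and the `low`/`high` thresholds are all assumptions picked for illustration, and `half` is half the box width.

```python
from itertools import product

def square_perimeter(cx, cy, half):
    """Yield each pixel on the border of the square centred on (cx, cy) once."""
    for d in range(-half, half):
        yield cx + d, cy - half   # top edge
        yield cx + half, cy + d   # right edge
        yield cx - d, cy + half   # bottom edge
        yield cx - half, cy - d   # left edge

def perimeter_ratio(image, cx, cy, half, background=0):
    """Fraction of in-bounds border pixels that differ from the background."""
    height, width = len(image), len(image[0])
    inside = [(x, y) for x, y in square_perimeter(cx, cy, half)
              if 0 <= x < width and 0 <= y < height]
    if not inside:
        return 0.0
    differing = sum(1 for x, y in inside if image[y][x] != background)
    return differing / len(inside)

def find_fingertips(image, xs, ys, half=4, low=0.02, high=0.15):
    """Keep the (x, y) pairings whose border ratio falls in the assumed range.

    low and high are made-up thresholds, not measured values.
    """
    return [(x, y) for x, y in product(xs, ys)
            if low < perimeter_ratio(image, x, y, half) < high]
```

With a finger entering the box from one side, only the few border pixels it crosses differ from the background, so the ratio is small but nonzero. An empty pairing scores 0, and a pairing that lands in the middle of a finger crosses the border twice and scores higher, which is why this sketch keeps only a middle range.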

I actually made a rough proof-of-concept system for this. It uses a very crude method of finding the different blobs on the mirrors (contiguous runs of the same color), and a very crude surrounding-box perimeter-ratio test. It’s only meant as a proof of concept, not necessarily the precursor to an actual program that does something along these lines.
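A minimal Python sketch of that kind of crude blob finder, assuming the 1-pixel band has already been read out as a flat list of color values and that one value (0 here) counts as background. The function name `band_blobs` and the choice to report the center of each run are assumptions, not details of the proof-of-concept.

```python
from itertools import groupby

def band_blobs(band, background=0):
    """Return the centre index of every contiguous same-colour run
    in a 1-pixel band, ignoring runs of the background colour."""
    centres = []
    index = 0
    for value, run in groupby(band):
        length = len(list(run))
        if value != background:
            centres.append(index + (length - 1) // 2)
        index += length
    return centres
```

Running this once on the band from each mirror gives the list of positions for that axis.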

As for how fast it is, I’m not sure. I don’t even know how things like touchlib do it. If they scan through every pixel and then do more processing on top, this could easily be 50x faster. The speed depends very largely on the number of fingers touching the surface. w + h + 4bf^2 is a rough approximation of how many pixels need to be processed to get the result (w = width resolution, h = height resolution, b = width of the surrounding box, f = number of fingers). On the proof-of-concept, the input data is 200x200, the box is set to a width of 20px, and there are 3 fingers touching, meaning 200 + 200 + 720 = ~1120 pixels searched. If you were to scan through all the pixels (as I originally thought the idea would require), it would be wh, or 200*200, or 40,000. So the speed increase is by a factor of about 36x, which is totally awesome. But again, I don’t know how others do it; they may already have an even faster way. Last year, I made a sort of object-tracking thing which worked by scanning every pixel, and it was able to run at quite decent speed. So this, being an order of magnitude faster, should work better.
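The arithmetic is easy to check in a few lines of Python. The function names here are made up for the example; the formula and the numbers are the ones from the paragraph above.

```python
def pixels_searched(w, h, b, f):
    """Rough pixel count: one band per mirror plus a 4*b perimeter
    for each of the f*f candidate pairings."""
    return w + h + 4 * b * f ** 2

def pixels_full_scan(w, h):
    """Pixel count when every pixel of the frame is visited."""
    return w * h

searched = pixels_searched(200, 200, 20, 3)   # 1120
full = pixels_full_scan(200, 200)             # 40000
speedup = full / searched                     # a bit under 36
```

Since the cost grows with f^2, the advantage shrinks as more fingers touch: with the same 200x200 input and 20px box, 10 fingers would already mean 8,400 pixels.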

Of course, this is still a concept. There are still huge flaws not yet covered, like the fact that in the real world, the software would have to somehow distinguish between the contents of the monitor and the hand in front of it. There’s a chance that someone’s hand is in an awkward position which tricks the software, the software is completely useless on just about anything other than a fingertip, and there are many, many more. I still find it interesting anyway :P

Subleq is an OISC, or one-instruction set computer. It’s Turing-complete, and implementing a VM for it takes maybe 10 lines of code in just about any language.
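For a sense of how small that is, here is a minimal Subleq interpreter sketched in Python. It assumes the common convention that each instruction is three cells `a b c` meaning "subtract mem[a] from mem[b], and jump to c if the result is <= 0", and that jumping to a negative address halts. It has no I/O, the `max_steps` guard is an addition for safety, and `run_subleq` is just a name chosen here; this is not the code behind the VM linked below.

```python
def run_subleq(memory, pc=0, max_steps=10_000):
    """Run a Subleq program and return the final memory as a list."""
    mem = list(memory)
    steps = 0
    # Stop on a negative or out-of-range program counter, or after max_steps.
    while 0 <= pc <= len(mem) - 3 and steps < max_steps:
        a, b, c = mem[pc], mem[pc + 1], mem[pc + 2]
        mem[b] -= mem[a]                   # the single instruction: subtract...
        pc = c if mem[b] <= 0 else pc + 3  # ...and branch if <= 0
        steps += 1
    return mem

# Adds the value in cell 9 to cell 10, using cell 11 as scratch space.
program = [9, 11, 3,    # scratch -= A
           11, 10, 6,   # B -= scratch (so B += A)
           11, 11, -1,  # scratch = 0, then halt
           5, 7, 0]     # A = 5, B = 7, scratch = 0
result = run_subleq(program)  # result[10] is now 12
```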

I made a purtyful VM which displays the memory and colorizes the changes in real time as a program runs.

http://antimatter15.110mb.com/misc/purtyleq.htm

It’s currently running http://99-bottles-of-beer.net/language-oisc-1395.html

So I’ve been thinking about a new design for a Multitouch system. I’ve googled it a bit, and it seems original.

Right now, there are a couple of popular multitouch designs. The most popular one is probably FTIR, or Frustrated Total Internal Reflection. This is the one used by Jeff Han in his TED demonstrations. There are several variations of FTIR, like Diffused Surface Illumination (DSI). Then there is Diffused Illumination (DI), which powers the Microsoft Surface, and a variation of DI is Front DI (where the light source is in front), like the simple DIY MTmini system (where the light source is ambient light). The problem with FTIR, DSI, and DI is that they require the camera to be behind the screen. This makes it impossible to retrofit an existing surface.

The Wiimote tricks by Johnny Chung Lee aren’t exactly multitouch. They involve wearing special things to interact. They are interesting nonetheless, but not true multitouch. It’s virtually a completely different market, though the Wiimote IR camera may be used with the LaserTouch system theoretically instead of the camera (I was planning to try this out originally).

Laser Light Plane, or LLP, is usually used similarly to the ones above. A variant of LLP is the Microsoft Research LaserTouch system (apparently used in Touchwall as well). In LLP, a laser hooked up to a line generator creates a “plane” of infrared light only millimeters above the surface. When something interacts with that plane, light is scattered in all directions. Most systems take light from the bottom, but LaserTouch looks at the light from the top. Wherever your finger touches the plane, it appears to have something like a thin halo around it.

LLP is interesting (especially the LaserTouch variant) because it allows for comparatively cheap multitouch. The Aixiz 780nm 5-10mW lasers (the ones most commonly used around nuigroup for LLP rigs) cost less than $10 each. Normally 4 or so are used together, though, and buying goggles for protection from the dangerous light may cost close to a hundred. The visible light filter is also a slight tax, along with disassembling a webcam to remove its IR filter, making it closer to the $100 estimate by Microsoft.

Well, I have a relatively simple idea. You just have a very thin mirror angled just right off to the side of the surface (actually, 2 mirrors, for two coordinates). You are probably thinking that this is only going to be like the normal single-touch systems, which suffer from not being able to detect where you actually pushed when there are multiple points. Actually, the mirrors are only used to determine whether you’ve contacted the screen yet. The position is determined by some magical image processing that hasn’t been implemented yet.

So what do you think of this idea? Did I explain it enough? I’m probably gonna elaborate on this later.

Why? I donno. Wikis are awesome. I just want to see how the wiki concept could work for a site like this.

It’s not going to replace my blog, but it may be used for some documentation and stuff.

This is somewhat random, but I’m 13 years old. I started the Ajax Animator 2 years ago. I’ve always sorta kept my age somewhat secret, maybe out of paranoia. I still feel sorta awkward blogging about this now.

So I just tried Safari 4 Beta, and I’m quite impressed, but it’s strange that it’s almost exactly like Chrome. It’s like not only is Chrome based on WebKit, but WebKit is based on Chrome. Sorta like Vista = OS X, Gadgets/Widgets, Search/Spotlight stuff. I’m somewhat annoyed about not being able to close tabs with middle click, and how, unlike Firefox+TabKit, it only has that small possible tab space. Especially since I have a dysfunctional mental garbage-collection system, so I easily have >20 tabs open at a time.